Building a Scalable Computer Vision Pipeline for Intelligent Transportation Systems (ITS) Using Kafka

The adoption of pan-tilt-zoom (PTZ) CCTV cameras in intelligent transportation systems is on the rise, driven by declining costs and enhanced capabilities. In certain instances, roads spanning hundreds of kilometers are equipped with CCTV cameras placed every 200–500 meters, ensuring comprehensive road coverage.

While CCTV cameras give operators an effective way to monitor roads and rails, the growing number of PTZ cameras across transport networks has increased the workload and cognitive burden on staff. How can the latest advances in artificial intelligence, computer vision, and digital technology help reduce this burden?

Introduction

Cameras have been used on road and rail networks for decades, deployed wherever operators need a view of road conditions. In the past, when cameras were expensive and only a few could be purchased, they were placed strategically at high-risk locations such as junctions, slip roads, toll booths, and tunnels for optimum coverage.

These legacy cameras were typically analog, low resolution, and fixed in position, with no zoom or motors for movement, so operators had only limited visibility of the road. If an incident happened outside the camera’s field of view (FOV), there was little the operator could do apart from waiting for a patrol to arrive on the scene and assess the situation.

Fast forward to today, and many stretches of road now have multiple sophisticated HD cameras that let operators monitor and understand what is happening in real time and prioritize the allocation of resources. These cameras are no longer fixed in position but offer pan-tilt-zoom capabilities: they can rotate 360 degrees, tilt up and down, and zoom in and out. They also use 4G/5G connectivity, avoiding the need for expensive networking infrastructure such as fiber optics.

Computer Vision Use Cases for Intelligent Transport Systems (ITS)

Computer vision is one of the most valuable data sources for intelligent transport systems and digital twins. It provides rich and relatively accurate insights for many domains and use cases, for example:

· Event detection: Detecting events in real time, for example hazards, stopped vehicles, wrong-way driving, and pedestrians or animals on the road

· Risk prediction: Detecting near misses and risky behavior such as harsh braking and antisocial driving

· Weather data: Fog, rain, wind, ice/snow

· Queue length and junction optimization

· License plate recognition

· Traffic flow: Vehicle flow, speed, and classification

· Security and vandalism

· GIS: Location and condition of assets such as signs, road markings, potholes, and crash fences.

As compelling as computer vision is, it is also very challenging:

· Cameras don’t work very well at night unless they have special IR illumination.

· There are many camera brands and models, with many differences in protocols, compression types, interfaces, lens types, and capabilities. Some sites still use analog cameras for monitoring, while others use sophisticated CCTV cameras with edge processing.

· Video processing is complex and expensive.

· Accuracy also drops in poor weather conditions such as fog, rain, snow, and strong wind.

· PTZ cameras add a further significant challenge for computer vision, which I will discuss in detail later.

Computer vision pipelines

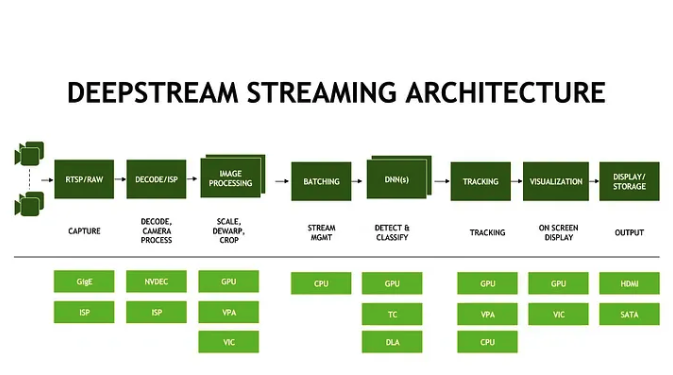

Computer vision pipelines for intelligent transport normally include some or all of the following steps:

· Integrating with the camera feed

· Pre-processing the video: scaling down the resolution/FPS, noise correction, and color correction

· Decoding the video: from H.264 to raw images

· Masking the video to remove areas that are not interesting

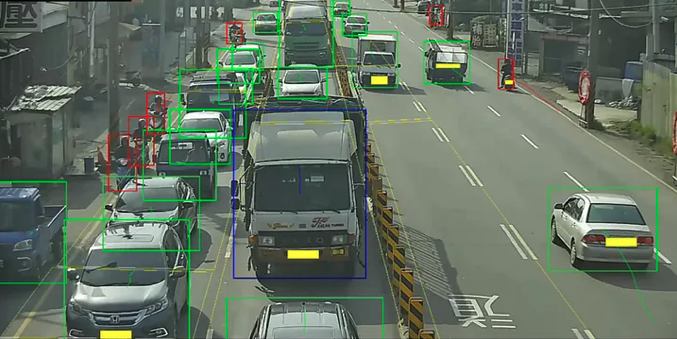

· Detecting features, 2D/3D objects, or areas (segmentation) in each frame, and separating background from foreground

· Classifying the objects (semantic labeling)

· Geolocating the objects: from image space to GIS space

· Tracking the objects using feature matching and dynamic models

· Analyzing the scene: trajectories, applying certain rules, searching for anomalies

· Extracting events and insights and raising alerts

· Adding overlays to the video and re-encoding it at multiple resolutions to simplify viewing
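Masking can be applied to pixels before detection or, more cheaply, to detections afterwards. The sketch below takes the second route and is only an illustration, assuming detections arrive as pixel bounding boxes and the region of interest (for example, a carriageway) is a polygon drawn by an operator on a static view; `Detection`, `point_in_polygon`, and `apply_roi_mask` are hypothetical names. A detection is kept if the bottom-centre of its box, roughly where a vehicle meets the road, falls inside the polygon according to a ray-casting test.

```python
from dataclasses import dataclass

Point = tuple[float, float]

@dataclass
class Detection:
    """Hypothetical detector output: a label and a pixel bounding box."""
    label: str
    x1: float
    y1: float
    x2: float
    y2: float

def point_in_polygon(p: Point, polygon: list[Point]) -> bool:
    """Ray casting: toggle 'inside' each time a horizontal ray from p crosses an edge."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        xa, ya = polygon[i]
        xb, yb = polygon[(i + 1) % n]
        if (ya > y) != (yb > y):  # edge straddles the ray (so ya != yb)
            x_cross = xa + (y - ya) * (xb - xa) / (yb - ya)
            if x < x_cross:
                inside = not inside
    return inside

def apply_roi_mask(detections: list[Detection], roi: list[Point]) -> list[Detection]:
    """Keep only detections whose bottom-centre point lies inside the ROI polygon."""
    kept = []
    for d in detections:
        anchor = ((d.x1 + d.x2) / 2, d.y2)  # bottom-centre in image coordinates
        if point_in_polygon(anchor, roi):
            kept.append(d)
    return kept
```

Filtering detections rather than pixels keeps the detector unchanged, but the polygon is only meaningful while the camera view stays fixed.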

With all of these steps, it’s important to note that AI and computer vision are still very limited and don’t match the depth and sophistication of the human brain.

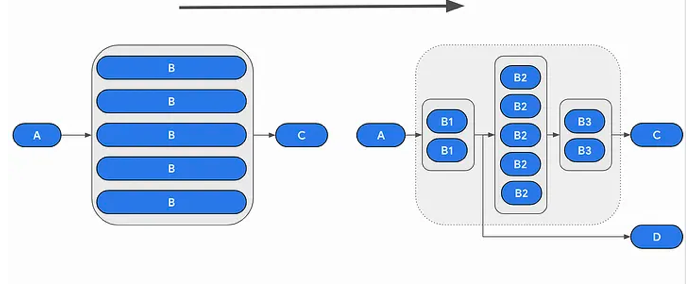

Generally, there are a few approaches to implementing computer vision pipelines:

Tenancy model

· Pipeline per camera (single-tenant): Each camera has a dedicated pipeline that includes all of these steps.

· Pipeline for many cameras (multi-tenant): One pipeline handles the processing for many cameras, and the compute resources are shared across all of them.
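In the multi-tenant model, a single worker serves many cameras, so any per-camera state has to be kept separate inside the shared process. A minimal sketch, assuming every incoming message carries a `camera_id`; the hypothetical `MultiTenantCounter` just keeps a running vehicle count per camera, standing in for heavier state such as a tracker:

```python
from collections import defaultdict

class MultiTenantCounter:
    """Hypothetical shared worker: one process, many cameras, state kept per camera_id."""

    def __init__(self) -> None:
        # One entry per camera; a real tracker would store tracks here instead of a count.
        self.counts = defaultdict(int)

    def handle(self, message: dict) -> dict:
        cam = message["camera_id"]
        self.counts[cam] += len(message.get("detections", []))
        return {"camera_id": cam, "total_vehicles": self.counts[cam]}
```

Because state is keyed by camera, the worker can be scaled out as long as each camera's messages consistently reach the same replica.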

Rigid vs Agile

· Rigid pipeline: Harder to add functionality, as any change requires full testing, and limited by the CPU and memory allocated to the pipeline.

· Agile pipeline: Easier to add new functionality and local changes don’t have a ripple effect.

Rigid single-tenant pipelines are most common on edge devices, while agile multi-tenant ones are more popular in cloud/data center deployments, as you can scale and tailor each step with the right resources.

You can also use a hybrid approach, where some steps are single-tenant while others are shared, or a hybrid multi-tenant approach, where some steps are performed on-premises while the rest run in the cloud.

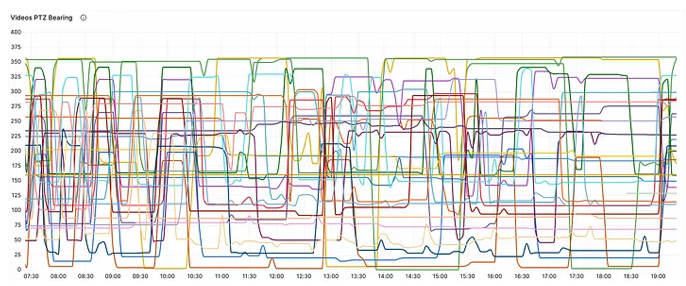

The PTZ Challenge

Transport operators almost always prefer a PTZ CCTV camera over a static one. PTZ increases area coverage, lets operators zoom in on events miles away, and ultimately means fewer cameras need to be installed.

However, for computer vision purposes, PTZ is a nightmare. With a fixed image, it is possible to “mask” or manually label areas and objects in the scene, for instance a junction, a bus stop, a carriageway, or a bridge, which helps the computer vision algorithms focus on specific locations when detecting and classifying events. With a moving PTZ camera, this becomes a challenge: areas cannot be defined in the image because it constantly changes, so the algorithm’s baseline “state” needs to change with it.
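One possible workaround, offered here as an assumption rather than the approach the article prescribes, is to define masks per PTZ preset and apply a mask only while the camera's reported pan, tilt, and zoom are close to that preset, leaving the caller to fall back to a safe default otherwise. A minimal sketch with hypothetical names and tolerances:

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class PtzPose:
    pan: float   # degrees
    tilt: float  # degrees
    zoom: float  # zoom factor, 1.0 = widest

@dataclass
class PresetMask:
    """Hypothetical mask drawn by an operator while the camera sat at `pose`."""
    name: str
    pose: PtzPose
    polygon: list  # ROI polygon in pixel coordinates, valid only at this pose

def angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two angles in degrees (359 vs 1 -> 2)."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)

def active_mask(current: PtzPose, masks: list, max_angle: float = 1.0,
                max_zoom_ratio: float = 1.05) -> Optional[PresetMask]:
    """Return the mask whose preset matches the current pose, or None if off-preset."""
    for m in masks:
        ratio = max(current.zoom, m.pose.zoom) / min(current.zoom, m.pose.zoom)
        if (angle_diff(current.pan, m.pose.pan) <= max_angle
                and angle_diff(current.tilt, m.pose.tilt) <= max_angle
                and ratio <= max_zoom_ratio):
            return m
    return None  # camera is between presets: no mask can be trusted
```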

Another example is traffic speed measurement. Speed is calculated from the time it takes a vehicle to travel a fixed, known distance. However, if the camera’s PTZ parameters change, these distances are no longer accurate, which can make the measured speed significantly higher or lower than the true speed.
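A small worked example shows how large the error can be. Speed is v = d / t; the sketch assumes an idealized pinhole camera in which zooming in by a factor k makes the same pair of image lines span roughly 1/k of the original ground distance (real perspective makes this non-uniform across the image). The distances and times are hypothetical:

```python
def speed_kmh(distance_m: float, seconds: float) -> float:
    """Speed from the time taken to travel a known distance (m/s converted to km/h)."""
    return distance_m / seconds * 3.6

# Hypothetical numbers for illustration.
calibrated_m = 20.0   # distance between two virtual lines, calibrated at 1x zoom
zoom_factor = 2.0     # the operator has since zoomed in 2x
true_m = calibrated_m / zoom_factor  # idealized: the same lines now span about 10 m

t = 0.4  # seconds taken by a vehicle to cross between the lines
assumed = speed_kmh(calibrated_m, t)  # about 180 km/h, using the stale calibration
actual = speed_kmh(true_m, t)         # about 90 km/h, the vehicle's real speed
```

With a stale 1x calibration, a 2x zoom doubles the reported speed; zooming out would understate it instead.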

And lastly, geolocating an event from image space is challenging enough with static cameras and requires manual calibration; with PTZ cameras that zoom and rotate freely and have unknown lens distortion and perspective, it becomes far harder.
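For a static camera looking at a roughly flat road, a common calibration technique (not necessarily the one used in the article's system) is a planar homography: a 3×3 matrix H estimated from at least four image points whose map coordinates are known. A pixel (u, v) maps to ground coordinates by computing (X', Y', w) = H·(u, v, 1) and dividing by w. A minimal sketch, assuming H has already been estimated and lens distortion has already been corrected; `pixel_to_ground` is a hypothetical name:

```python
Matrix3 = list[list[float]]

def pixel_to_ground(H: Matrix3, u: float, v: float) -> tuple[float, float]:
    """Apply a planar homography: [X', Y', w] = H @ [u, v, 1], then divide by w."""
    X = H[0][0] * u + H[0][1] * v + H[0][2]
    Y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(w) < 1e-12:
        # The pixel maps to infinity on the ground plane (e.g. at or above the horizon).
        raise ValueError("pixel does not map to a finite ground point")
    return X / w, Y / w
```

Because H is valid for only one camera pose, every pan, tilt, or zoom change invalidates it, which is exactly why PTZ cameras make geolocation so much harder.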

PTZ Opportunities

PTZ also presents opportunities for computer vision technology. For example, the system can control the camera automatically and point it at high-risk areas, or initiate a repetitive scan of an area to actively detect hazards.
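A repetitive scan can be as simple as cycling through a list of presets with a dwell time at each. A minimal sketch: `Preset` and `patrol_schedule` are hypothetical, and the code only produces the schedule; actually moving the camera (for example, through ONVIF PTZ commands) is left out:

```python
from dataclasses import dataclass
from itertools import cycle, islice

@dataclass(frozen=True)
class Preset:
    """Hypothetical camera preset: a named view and how long to stay on it."""
    name: str
    dwell_s: float  # seconds to hold this view while the detectors run

def patrol_schedule(presets, start_s: float = 0.0):
    """Yield (time_s, preset) pairs endlessly, advancing after each preset's dwell time."""
    t = start_s
    for preset in cycle(presets):
        yield t, preset
        t += preset.dwell_s

tour = [Preset("junction", 30), Preset("tunnel_portal", 20)]
first_three = list(islice(patrol_schedule(tour), 3))
# Times of the first three moves: 0.0 (junction), 30.0 (tunnel_portal), 50.0 (junction)
```

A risk-driven variant could interrupt the cycle and jump to a preset when an upstream alert fires.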

Computer Vision Pipelines over Kafka

Before we discuss architecture concepts for implementing computer vision pipelines, we need to map out the technical requirements.

Computer Vision Pipelines Requirements

· Scalable: Can scale to support thousands of cameras

· Real-time: The entire processing chain needs to complete within seconds

· Resilient: A highly available system

· Open: Support for major CCTV protocols and formats: RTSP, H.264, MJPEG, ONVIF

· Bypass: If some cameras already perform some steps (e.g., object detection), they can bypass those steps and push their data into the middle of the pipeline

· Decoupled: Clear separation of concerns and functionality. Individual components can be updated without affecting the entire system. For example, if the pipeline uses YOLOv1 for object detection, upgrading the object detection step to YOLOv10 should not create a shock wave that affects the entire system.

· Modular: Easy to add new processing capabilities and models

· FAIR: Data is findable, accessible, interoperable, and reusable

· Affordable: Use economy-of-scale techniques. For example, use the right hardware for the right task: GPUs (NVIDIA TensorRT, CUDA) for AI models, while other tasks might run more efficiently on a CPU. Auto-scaling: scale variable-load tasks, such as the tracker, up during rush hour and down at night. Choose the right video resolution and FPS to balance performance and cost.

· Upgradeable: Zero-downtime upgrades of core components and services
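The Bypass requirement maps naturally onto topic routing: a message enters the pipeline at the first stage whose output it does not already contain. A minimal sketch, assuming one Kafka topic per stage input; the topic and field names are hypothetical:

```python
# Hypothetical pipeline: (field a stage produces, topic that stage consumes), in order.
PIPELINE = [
    ("detections", "cv.frames"),  # detector reads frames, produces detections
    ("tracks", "cv.detections"),  # tracker reads detections, produces tracks
    ("events", "cv.tracks"),      # analyzer reads tracks, produces events
]
FINAL_TOPIC = "cv.events"

def entry_topic(message: dict) -> str:
    """Route a message to the input topic of the first stage it has not been through.

    A smart camera that already ran object detection sends {"detections": [...]},
    so it skips decoding and detection and lands directly on the tracker's input.
    """
    for produced_field, input_topic in PIPELINE:
        if produced_field not in message:
            return input_topic
    return FINAL_TOPIC
```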

Kafka

Kafka is an open-source, real-time, mature, resilient, and scalable streaming platform used by many tech companies to stream events and data between services. Even though it is hard to tune, it’s the only solution that can deliver all of the requirements above.

Solution Concepts

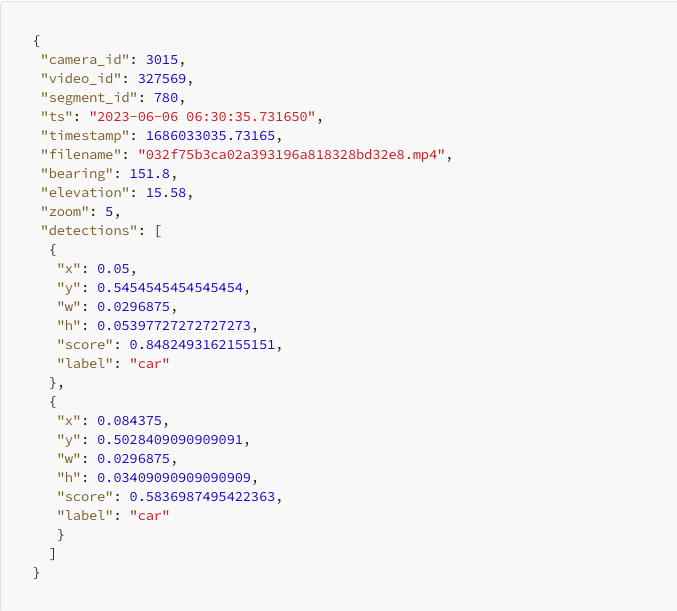

Kafka is the source of truth! All data, raw, processed, and enriched, should first be available on Kafka and then routed to the right service.

Video and images: Kafka can carry video and images, and since we want it to be the source of truth, it is desirable to store video and images on Kafka itself. However, tuning Kafka to support rich multimedia is hard (brokers limit message size to about 1 MB by default), so an easier approach might be to store them externally on a shared file system and provide a reference in the Kafka message.
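Storing the payload externally and passing only a reference is often called the claim-check pattern. A minimal sketch, assuming a directory mounted by all services (an object store would play the same role in many deployments); `store_frame` and the message fields are hypothetical. Naming each file by its SHA-256 hash means a retried write produces the same file and the same reference:

```python
import hashlib
import json
import time
from pathlib import Path

def store_frame(frame_bytes: bytes, camera_id: str, shared_dir: Path) -> bytes:
    """Write a frame to shared storage; return a small JSON message value referencing it."""
    digest = hashlib.sha256(frame_bytes).hexdigest()
    path = (shared_dir / camera_id / f"{digest}.jpg").resolve()
    path.parent.mkdir(parents=True, exist_ok=True)
    if not path.exists():  # content-addressed, so an identical retry is a no-op
        path.write_bytes(frame_bytes)
    message = {  # hypothetical message layout
        "camera_id": camera_id,
        "captured_at": time.time(),
        "frame_uri": path.as_uri(),
        "sha256": digest,
        "size_bytes": len(frame_bytes),
    }
    return json.dumps(message).encode("utf-8")
```

The returned bytes would be sent as the value of a Kafka record keyed by camera ID; consumers fetch the frame only if they need the pixels.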

Microservice architecture: Tasks should be implemented as stateless or stateful Dockerized microservices and orchestrated using frameworks such as Kubernetes.

Break processing into small steps, each in its own agent. Large, complex processing steps create many issues: you can’t reuse intermediate results for other needs, you don’t have good observability of the process, and they are harder to debug and inefficient to scale.
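The one-step-per-agent idea can be illustrated without a broker. In this sketch an in-memory dictionary of lists stands in for Kafka topics, and each hypothetical `Agent` reads from one topic, applies one function, and writes to another, tracking its own read offset much as a consumer group does. Because the intermediate `detections` topic persists, any other service could consume it too:

```python
from collections import defaultdict
from typing import Callable

topics: dict[str, list] = defaultdict(list)  # in-memory stand-in for Kafka topics

class Agent:
    """Hypothetical single-purpose step: consume one topic, transform, produce to another."""

    def __init__(self, name: str, source: str, sink: str, fn: Callable[[dict], dict]):
        self.name, self.source, self.sink, self.fn = name, source, sink, fn
        self.offset = 0  # like a committed offset: how far this agent has read

    def poll(self) -> int:
        """Process all new messages on the source topic; return how many were handled."""
        new = topics[self.source][self.offset:]
        for msg in new:
            topics[self.sink].append(self.fn(msg))
        self.offset += len(new)
        return len(new)

# Toy "detector" and "counter" steps (hypothetical logic for illustration).
detector = Agent("detector", "frames", "detections",
                 lambda m: {**m, "detections": ["car"] if m["motion"] else []})
counter = Agent("counter", "detections", "counts",
                lambda m: {"camera_id": m["camera_id"], "n": len(m["detections"])})

topics["frames"] += [{"camera_id": "A", "motion": True}, {"camera_id": "A", "motion": False}]
detector.poll()
counter.poll()
# topics["counts"] now holds [{"camera_id": "A", "n": 1}, {"camera_id": "A", "n": 0}]
```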

Digital Twins: In addition to computer vision, Kafka can stream data from other sources, e.g., sensors, weather services, radars, and connected vehicles. These data sources can either assist the computer vision tasks or be fused with them to achieve more complete, accurate, or rich insights.
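Fusing another source with computer vision output often comes down to a time-aligned (as-of) join. A minimal sketch, assuming weather readings are sorted by timestamp and each reading stays in force until the next one arrives; `enrich` and the field names are hypothetical, and in production a stream processor would more likely perform this join:

```python
from bisect import bisect_right

def enrich(events: list[dict], weather: list[tuple[float, dict]]) -> list[dict]:
    """Attach to each event the latest weather reading at or before its timestamp."""
    times = [t for t, _ in weather]  # assumes `weather` is sorted by timestamp
    out = []
    for e in events:
        i = bisect_right(times, e["ts"])  # number of readings at or before the event
        out.append({**e, "weather": weather[i - 1][1] if i > 0 else None})
    return out
```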

Kafka Partitioning: Use the right Kafka partitioning and replication factors to ensure scalability and resilience. For example, you can partition data geographically or logically, per camera.
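Keying messages by camera ID (logical) or by road segment (geographic) sends every message with that key to the same partition, which preserves per-key ordering, something a tracker relies on. A minimal sketch of the mapping; note that Kafka's default partitioner hashes keys with murmur2, while this illustration uses `zlib.crc32` so it runs on the standard library alone, so its partition numbers will not match a real cluster. `partition_for` and `partition_key` are hypothetical:

```python
import zlib

def partition_for(key: str, num_partitions: int) -> int:
    """Deterministically map a key to a partition (illustrative hash, not Kafka's murmur2)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions

def partition_key(camera_id: str, road_segment: str, geographic: bool) -> str:
    """Choose the key: road segment for geographic partitioning, camera ID for logical."""
    return road_segment if geographic else camera_id
```

Because the mapping depends on the partition count, adding partitions later moves keys to different partitions, so it is worth provisioning headroom up front.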

Kafka Lag: Monitor consumer lag to guarantee the level of service and end-to-end latency, and use the lag to autoscale services.
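Consumer lag for a partition is its log-end offset minus the consumer group's committed offset. A minimal sketch of turning total lag into a replica count, assuming the offsets have already been fetched and that one replica can clear a hypothetical `target_lag_per_replica` messages within the latency budget. The result is capped at the partition count because, within a consumer group, each partition is consumed by at most one member, so extra replicas would sit idle. Tools such as KEDA's Kafka scaler apply the same idea on Kubernetes.

```python
import math

def total_lag(end_offsets: dict[int, int], committed: dict[int, int]) -> int:
    """Sum of (log-end offset - committed offset) across partitions.

    Simplifying assumption: a partition with no committed offset counts from offset 0.
    """
    return sum(max(end - committed.get(p, 0), 0) for p, end in end_offsets.items())

def desired_replicas(lag: int, target_lag_per_replica: int,
                     num_partitions: int, min_replicas: int = 1) -> int:
    """Replicas needed so each handles at most target_lag_per_replica messages,
    clamped to [min_replicas, num_partitions]."""
    wanted = math.ceil(lag / target_lag_per_replica)
    return max(min_replicas, min(wanted, num_partitions))
```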

Schema Manager: Use a schema manager (such as a schema registry) to manage schemas across the entire system, for both JSON and Avro.
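In practice, the main job of a schema manager here is to stop a producer from publishing a change that breaks downstream consumers. The sketch below checks just one simplified Avro rule, assuming plain Avro record schemas: a new schema can still read data written with the old one only if every field it adds declares a default. Real registries check many more rules (type promotion, unions, nested records), and the `Detection` schemas are hypothetical:

```python
# Hypothetical detection schemas, written as Avro record definitions.
detection_v1 = {
    "type": "record", "name": "Detection",
    "fields": [
        {"name": "camera_id", "type": "string"},
        {"name": "label", "type": "string"},
        {"name": "confidence", "type": "float"},
    ],
}
detection_v2 = {**detection_v1, "fields": detection_v1["fields"] + [
    {"name": "model_version", "type": "string", "default": "unknown"},
]}

def added_fields_have_defaults(old: dict, new: dict) -> bool:
    """Simplified backward-compatibility check: fields new to `new` must have defaults."""
    old_names = {f["name"] for f in old["fields"]}
    return all("default" in f for f in new["fields"] if f["name"] not in old_names)
```

A field like `model_version` with a default lets the detection step be upgraded without breaking consumers that still read older records.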

A/B Testing: Kafka simplifies testing new features and capabilities. Bringing up a parallel branch of certain tasks and comparing its results in real time with those of the main branch becomes a simple, achievable task.
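Because each consumer group tracks its own offsets, a test branch can read the same input topic under a different group ID without affecting the main branch. Comparing the two output streams can then be as simple as matching results by frame. A minimal sketch, assuming each branch's output has been collected as a mapping from frame ID to the set of detected labels; the per-frame agreement rate in the hypothetical `agreement_rate` is one metric among many:

```python
def agreement_rate(main: dict[str, set[str]], test: dict[str, set[str]]) -> float:
    """Fraction of frames processed by both branches whose detected label sets match exactly."""
    common = main.keys() & test.keys()  # frames both branches have reported on
    if not common:
        return 0.0
    matches = sum(1 for frame_id in common if main[frame_id] == test[frame_id])
    return matches / len(common)
```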

Summary

Scaling computer vision pipelines to handle thousands of PTZ CCTV cameras is a complex engineering task that requires skills, planning, and solid experience in both software architecture and computer vision. When these are combined the right way as part of a full digital twin system for intelligent transportation, the result can be a powerful and sophisticated system that comes closer to what operators expect today, after years of hearing promises about big data, AI, and cloud technologies.

See Lanternn by Valerann™ in action – book a demo.
